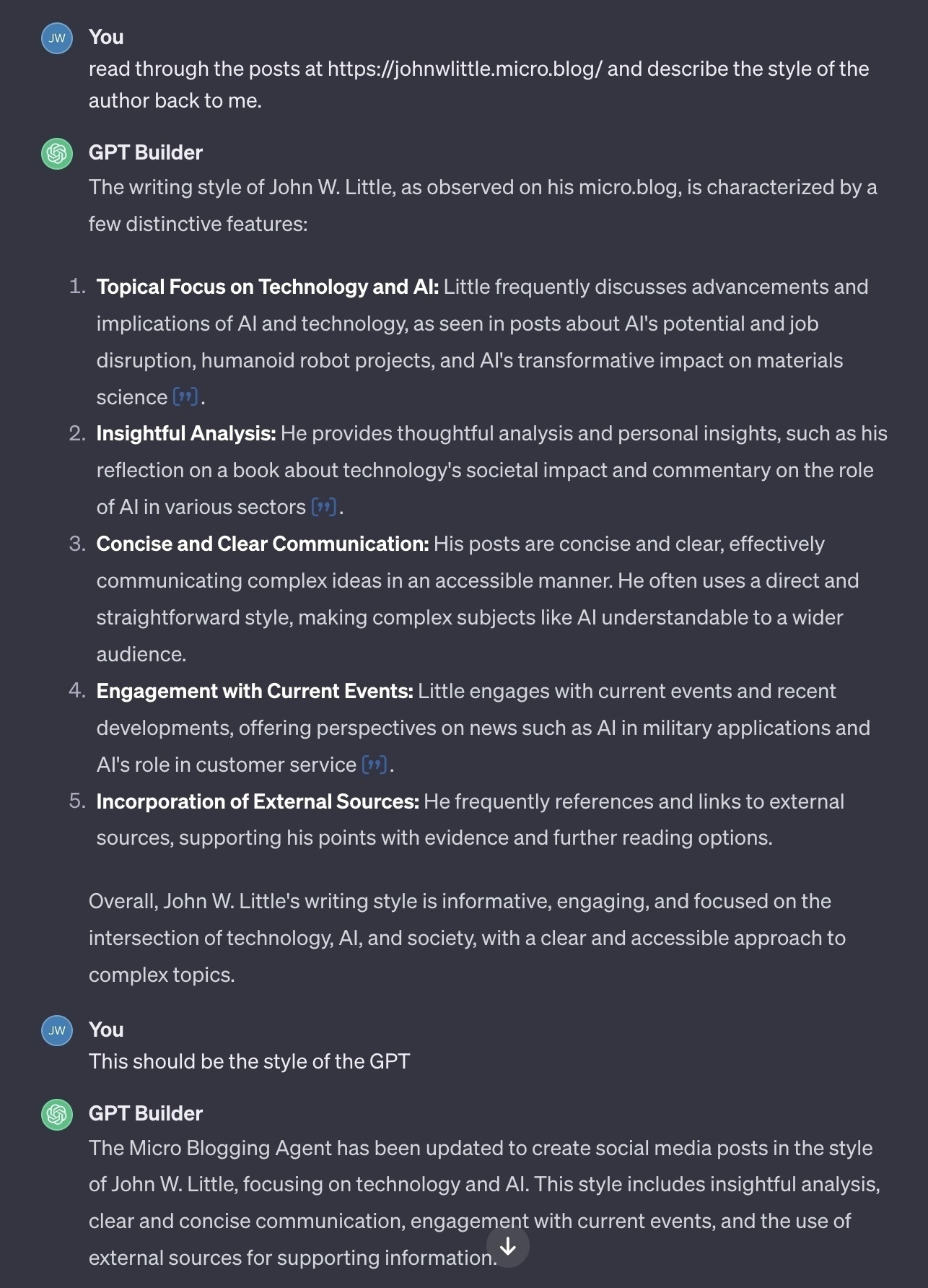

I’ve been tracking humanoid robotics startup Figure for a while, but only now, thanks to a string of significant progress updates, are they really starting to grab people’s attention. This interview with CEO Brett Adcock is the most revealing update I’ve seen to date.

AI and robotics enable and drive each other’s development in a profound way. I think we’ll see more progress in the next 3-5 years than we saw in the previous couple of decades, and the capability and deployment curves will rise exponentially from there.